Recently, I manually attached pre-existing EBS volumes to EC2 instances in an EKS (k8s) cluster. My goal was to expose these EBS volumes as k8s persistent volumes using a custom storage class. I picked the CSI local volume driver/provisioner to do the gluing.

The ride was fun, but also a bit bumpy. Here, I am sharing the config and commands I ended up using. I think this setup is rather tidy and seems to work well when you want to manually manage the lifecycle of an EBS volume and its relationship to a Kubernetes environment. (In many other cases, when the Kubernetes cluster is supposed to have authority over the EBS volumes, you’d probably use something like the EBS CSI driver with its slightly involved privilege setup).

In the following steps

- we see how to create a new k8s storage class called fundisk-elb-backed (you can pick a different name, of course :-)).

- we’ll be running the CSI local volume driver as daemonset on each machine in the k8s cluster. It will be configured to automatically discover any pre-formatted volumes mounted at a path with the pattern /mnt/disks-for-csi/*.

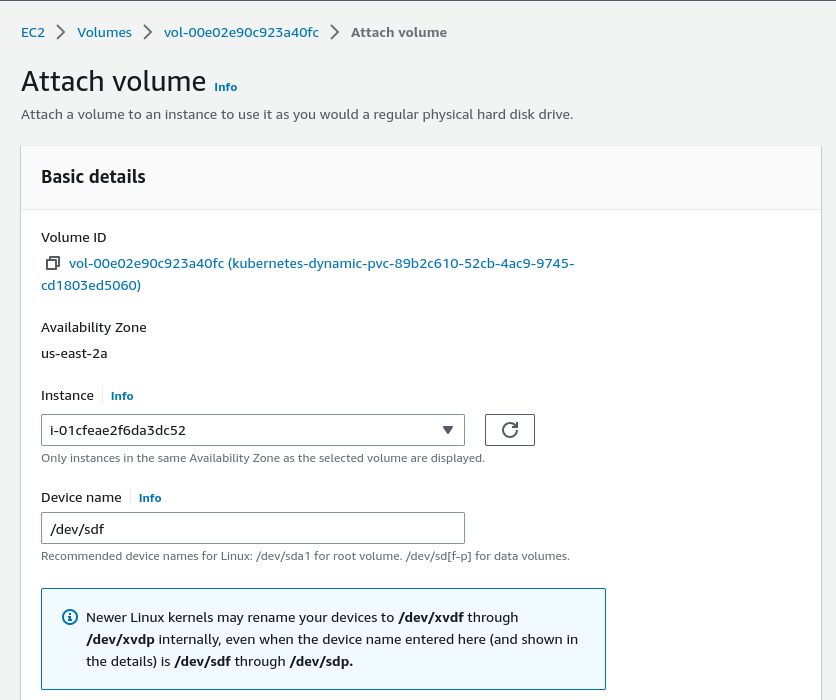

Attach EBS volumes to EC2 instances

I know you know you have to do this :-). But for completeness: attachem! I used the browser UI to do the attachment. In my case, I attached

vol-0319df353ceaeac9a to i-09782185499dsae1b (us-east-2b)

vol-00e03e90c923a40fc to i-01cfeae2f6da3dc52 (us-east-2a)
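If you prefer a terminal over the browser UI, the same attachment can probably be done with the AWS CLI. This is a sketch I did not use for this post: it assumes the `aws` CLI is installed and configured for the right region, and the `attach_volume` helper and its `DRY_RUN` switch are made up for illustration. By default it only prints the command it would run. Remember that a volume can only be attached to an instance in the same availability zone.

```shell
# Sketch: attach an existing EBS volume from the CLI instead of the browser UI.
# attach_volume and DRY_RUN are hypothetical helpers, not part of the AWS CLI.
# With DRY_RUN=1 (the default) the command is only printed, not executed.
attach_volume() {
  volume_id="$1"
  instance_id="$2"
  device="${3:-/dev/sdf}"   # same default device name as in the browser UI
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "aws ec2 attach-volume --volume-id $volume_id --instance-id $instance_id --device $device"
  else
    aws ec2 attach-volume --volume-id "$volume_id" --instance-id "$instance_id" --device "$device"
  fi
}

# The two attachments from this post (printed only, unless DRY_RUN=0 is set).
attach_volume vol-0319df353ceaeac9a i-09782185499dsae1b
attach_volume vol-00e03e90c923a40fc i-01cfeae2f6da3dc52
```

Run it once as-is to review the printed commands, then again with `DRY_RUN=0` if they look right.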

I used the default setting of /dev/sdf (/dev/xvdf). Example screenshot:

Create and mount filesystems

I mentioned “pre-formatted” above. What does that mean? We’re going to use the driver with volumeMode set to "Filesystem" (as opposed to "Block"). Of course you might want to make a different choice. Also see docs. My goal was to

- offer each persistent volume with a ready-to-use filesystem (ext4 in this case) to k8s applications

- not have the CSI local volume driver mutate the volume contents (it can create a filesystem for you, but I wanted to try without this magic)

We’re also going to follow this recommendation:

For volumes with a filesystem, it’s recommended to utilize their UUID (e.g. the output from ls -l /dev/disk/by-uuid) both in fstab entries and in the directory name of that mount point

So. Do the following steps as root on each of the machines that you have attached a volume to. Tip: connecting via EC2 dashboard “session manager” works reasonably well and gives you a browser-based terminal. sudo su yields root.

Maybe make a quick check with fdisk -l to see if /dev/sdf appears to be what you expect it to be. If it looks right: create a filesystem on it (you know the deal: you don’t want to run this on the wrong device):

$ mkfs.ext4 /dev/sdf

mke2fs 1.42.9 (28-Dec-2013)

...

Creating journal (32768 blocks): done

Writing superblocks and filesystem accounting information: done

Then create directory /mnt/disks-for-csi/<filesystem-uuid> as well as an fstab entry:

FS_UUID=$(sudo blkid -s UUID -o value /dev/sdf)

echo $FS_UUID

mkdir -p /mnt/disks-for-csi/$FS_UUID

mount -t ext4 /dev/sdf /mnt/disks-for-csi/$FS_UUID

echo "UUID=$FS_UUID /mnt/disks-for-csi/$FS_UUID ext4 defaults 0 2" | tee -a /etc/fstab

Quick peek into /etc/fstab for confirmation:

$ cat /etc/fstab

#

UUID=87a2b2dc-ac08-49e9-a3e8-228b6af71acf / xfs defaults,noatime 1 1

UUID=bea63a7a-38d3-47db-aae6-d9a7fcfa6a13 /mnt/disks-for-csi/bea63a7a-38d3-47db-aae6-d9a7fcfa6a13 ext4 defaults 0 2

Note that we did mkfs.ext4 /dev/sdf followed by blkid -s UUID -o value /dev/sdf. In this case, the blkid command returns

a filesystem-level UUID, which is retrieved from the filesystem metadata inside the partition. It can only be read if the filesystem type is known and readable.

which is what we want. Kudos to this SO answer.
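One thing to watch out for when scripting these steps: running the fstab command twice appends the same line twice. Here is a small sketch of how the append could be made repeatable. The helper names `fstab_line` and `append_once` are my own invention, and the example writes to a scratch file rather than the real /etc/fstab.

```shell
# Build the fstab line for a filesystem UUID (same fields as used in this post).
fstab_line() {
  printf 'UUID=%s /mnt/disks-for-csi/%s ext4 defaults 0 2\n' "$1" "$1"
}

# Append a line to a file only if that exact line is not already present.
append_once() {
  file="$1"
  line="$2"
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Example against a scratch file; set FSTAB=/etc/fstab (as root) for real use.
FSTAB="${FSTAB:-$(mktemp)}"
# Example UUID from this post; in practice: FS_UUID=$(blkid -s UUID -o value /dev/sdf)
FS_UUID="bea63a7a-38d3-47db-aae6-d9a7fcfa6a13"
append_once "$FSTAB" "$(fstab_line "$FS_UUID")"
append_once "$FSTAB" "$(fstab_line "$FS_UUID")"   # second call changes nothing
cat "$FSTAB"
```

The exact-line match (`grep -x -F`) is deliberately strict: a line for the same UUID with different mount options would not count as a duplicate.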

k8s: create service account, configmap, and daemonset for the volume driver

I assume that at least one node in your cluster now has an EBS-backed filesystem mounted at /mnt/disks-for-csi/<fs-uuid>.

Let’s move on to the k8s side of things. I fiddled for a while with the YAML documents shown below and went through a number of misconfigurations that were not always easy to debug. Before you start changing paths and names, make sure you understand how the CSI local volume driver performs volume discovery.
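As far as I understand it, discovery in Filesystem mode boils down to this: the driver looks at the entries directly below the discovery directory and only considers those that are actual mount points. The following sketch is not the driver's code. It is just a quick way to preview, on a node, which paths would qualify under that assumption. The function name `discoverable_mounts` is hypothetical, and the second argument exists only so that the function can be tried against a fake mounts file.

```shell
# Print mount points that sit directly below a discovery directory.
# Usage: discoverable_mounts <dir> [mounts-file]   (default: /proc/mounts)
discoverable_mounts() {
  dir="${1%/}"
  mounts="${2:-/proc/mounts}"
  # Field 2 of each line is the mount point. Keep it if it starts with
  # "<dir>/" and has exactly one more path component after that prefix.
  awk -v d="$dir/" '
    index($2, d) == 1 && length($2) > length(d) &&
    index(substr($2, length(d) + 1), "/") == 0 { print $2 }
  ' "$mounts"
}

# On a prepared node this should list /mnt/disks-for-csi/<fs-uuid>.
if [ -r /proc/mounts ]; then
  discoverable_mounts /mnt/disks-for-csi
fi
```

If this prints nothing on a node where you expected a volume, the mount is missing (or nested too deep), and I would not expect a persistent volume to show up for it.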

Three configuration artifacts need to be applied (especially the first two are based on manual trial and error, in addition to being based on docs and also reading code a bit):

- csi-localvol-driver-daemonset.yaml

- csi-localvol-config.yaml

- csi-localvol-service-account.yaml

Now for the contents.

csi-localvol-driver-daemonset.yaml :

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: local-volume-provisioner
  namespace: kube-system
  labels:
    app.kubernetes.io/name: local-volume-provisioner
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: local-volume-provisioner
  template:
    metadata:
      labels:
        app.kubernetes.io/name: local-volume-provisioner
    spec:
      serviceAccountName: local-volume-provisioner
      containers:
        - image: "registry.k8s.io/sig-storage/local-volume-provisioner:v2.5.0"
          imagePullPolicy: "Always"
          name: provisioner
          securityContext:
            privileged: true
          env:
            - name: MY_NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: MY_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: metrics
              containerPort: 8080
          volumeMounts:
            - name: provisioner-config
              mountPath: /etc/provisioner/config
              readOnly: true
            - mountPath: /mnt/disks-for-csi
              name: fundisk-elb-backed
              mountPropagation: "HostToContainer"
      volumes:
        - name: provisioner-config
          configMap:
            name: local-volume-provisioner-config
        - name: fundisk-elb-backed
          hostPath:
            path: /mnt/disks-for-csi

csi-localvol-config.yaml:

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: fundisk-elb-backed
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
reclaimPolicy: Retain
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: local-volume-provisioner-config
  namespace: kube-system
data:
  nodeLabelsForPV: |
    - kubernetes.io/hostname
  storageClassMap: |
    fundisk-elb-backed:
      # In this directory, expect at least one mounted (ext4) partition, e.g.
      # /mnt/disks-for-csi/422eaa2c-8284-41a4-a4fb-878ed6ae9802
      # Note that volumeMode: Filesystem is default, i.e. this expects to
      # have a filesystem. I prepared that.
      hostDir: /mnt/disks-for-csi
      mountDir: /mnt/disks-for-csi

csi-localvol-service-account.yaml :

apiVersion: v1
kind: ServiceAccount
metadata:
  name: local-volume-provisioner
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: local-storage-provisioner-node-clusterrole
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["watch"]
  - apiGroups: ["", "events.k8s.io"]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: local-storage-provisioner-node-binding
  namespace: kube-system
subjects:
  - kind: ServiceAccount
    name: local-volume-provisioner
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: local-storage-provisioner-node-clusterrole
  apiGroup: rbac.authorization.k8s.io

Apply k8s resources

Yes.

± kubectl apply -f csi-localvol-service-account.yaml

serviceaccount/local-volume-provisioner created

clusterrole.rbac.authorization.k8s.io/local-storage-provisioner-node-clusterrole created

clusterrolebinding.rbac.authorization.k8s.io/local-storage-provisioner-node-binding created

± kubectl apply -f csi-localvol-config.yaml

storageclass.storage.k8s.io/fundisk-elb-backed created

configmap/local-volume-provisioner-config created

± kubectl apply -f csi-localvol-driver-daemonset.yaml

daemonset.apps/local-volume-provisioner created

A bit of inspection

Let’s see if the daemonset running the driver is running smoothly:

$ kubectl describe daemonset/local-volume-provisioner -n=kube-system

...

Desired Number of Nodes Scheduled: 4

Current Number of Nodes Scheduled: 4

Number of Nodes Scheduled with Up-to-date Pods: 4

Number of Nodes Scheduled with Available Pods: 4

Number of Nodes Misscheduled: 0

...

Containers:

provisioner:

Image: registry.k8s.io/sig-storage/local-volume-provisioner:v2.5.0

...

Looks good. You may want to read the logs of an individual pod here, with for example:

± kubectl logs daemonset/local-volume-provisioner -n kube-system

Found 2 pods, using pod/local-volume-provisioner-lz228

I0404 09:21:16.325495 1 common.go:348] StorageClass "fundisk-elb-backed" configured with MountDir "/mnt/disks-for-csi", HostDir "/mnt/disks-for-csi", VolumeMode "Filesystem", FsType "", BlockCleanerCommand ["/scripts/quick_reset.sh"], NamePattern "*"

...

Let’s see if k8s picked up our custom storage class:

$ kubectl get storageclass

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

fundisk-elb-backed kubernetes.io/no-provisioner Retain WaitForFirstConsumer false 59d

yes! (you can see I ran this command much later :-)).

Start using those volumes!

Use your k8s foo to deploy something that makes use of k8s persistent volumes. Use the new storage class. I deployed a Prometheus StatefulSet using this new storage class. The following command and its output summarize everything in loving detail:

$ kubectl get pvc --namespace monitoring

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

prometheus-k8s-db-prometheus-k8s-0 Bound local-pv-81a1251e 19Gi RWO fundisk-elb-backed 3d

prometheus-k8s-db-prometheus-k8s-1 Bound local-pv-88739f7a 9915Mi RWO fundisk-elb-backed 3d

Cool.
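If you want a smaller smoke test than a full Prometheus StatefulSet, a standalone claim against the new storage class might look like the sketch below. The claim name `example-claim`, the file name `example-pvc.yaml` and the 5Gi request are made up for illustration. The request has to fit into one of the discovered volumes. Because the storage class uses WaitForFirstConsumer, the claim stays Pending until a pod actually uses it.

```shell
# Write a minimal PersistentVolumeClaim manifest for the custom storage class.
# example-claim, example-pvc.yaml and the 5Gi size are hypothetical values.
cat > example-pvc.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: example-claim
spec:
  accessModes: ["ReadWriteOnce"]
  storageClassName: fundisk-elb-backed
  resources:
    requests:
      storage: 5Gi
EOF

# Then apply it and watch the claim (not executed here):
#   kubectl apply -f example-pvc.yaml
#   kubectl get pvc example-claim
```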

Happy to read your comments.

Bye!
